Hi All,

I have an idea that I'd like to run past those running the Mix Challenge. At present it seems that only the top 15 mixes get feedback. This is understandable given the time involved; however, it means that those not selected (the vast majority) don't get feedback, and yet they're the ones who probably need it most.

So, I was thinking that a more structured mix evaluation process could help in two ways: firstly, all entrants would get some feedback, and secondly, the person evaluating the mixes would have a clear method to follow, which might even make the process quicker.

I was thinking of something like the following: have a set of criteria that the song provider uses to evaluate the mixes, and rate each criterion for every mix. As an example:

- Tonal Balance
- Internal Balances
- Dynamics/Transient Clarity
- Imaging
- Aesthetic Choices (e.g. dry/wet, etc.)

These criteria are just examples of what I would use; they are what a mastering engineer would initially listen for. The song provider would listen to each mix and rate each category (say, out of 10). For example:

Mix 1

=====

Tonal Balance 6

Internal Balances 4

Dynamics/Transient Clarity 7

Imaging 5

Aesthetic Choices 8

Total: 30

At the end of the listening process, the top scoring mixes would go through to the second round.
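To make the proposal concrete, here is a minimal Python sketch of the tally, under stated assumptions: each of the five example criteria is rated out of 10, every entrant receives the full breakdown, and the 15 highest totals go through to Round 2. The names `MixScore`, `breakdown` and `select_round_two` are hypothetical illustrations, not part of any existing Mix Challenge tooling.

```python
from dataclasses import dataclass

# The five example criteria from the proposal; a real rubric could differ.
CRITERIA = (
    "Tonal Balance",
    "Internal Balances",
    "Dynamics/Transient Clarity",
    "Imaging",
    "Aesthetic Choices",
)


@dataclass
class MixScore:
    """One entry's ratings: criterion name -> score out of 10 (assumed scale)."""

    mix_name: str
    scores: dict[str, int]

    def total(self) -> int:
        # Each criterion must be rated exactly once, and nothing else.
        if set(self.scores) != set(CRITERIA):
            raise ValueError(f"{self.mix_name}: scores must cover exactly {CRITERIA}")
        for criterion, value in self.scores.items():
            if not 0 <= value <= 10:
                raise ValueError(f"{self.mix_name}: {criterion}={value} is outside 0-10")
        return sum(self.scores.values())


def breakdown(mix: MixScore) -> str:
    """Per-criterion sheet that every entrant would receive, not just the total."""
    total = mix.total()  # validates the ratings before formatting
    lines = [mix.mix_name, "=" * len(mix.mix_name)]
    lines += [f"{criterion} {mix.scores[criterion]}" for criterion in CRITERIA]
    lines.append(f"Total: {total}")
    return "\n".join(lines)


def select_round_two(mixes: list[MixScore], slots: int = 15) -> list[MixScore]:
    """Highest totals go through; sorted() is stable, so ties keep submission order."""
    return sorted(mixes, key=lambda mix: mix.total(), reverse=True)[:slots]


if __name__ == "__main__":
    mix1 = MixScore("Mix 1", dict(zip(CRITERIA, (6, 4, 7, 5, 8))))
    print(breakdown(mix1))
```

Running the script prints the Mix 1 sheet from the example above, ending in `Total: 30`. Keeping ties in submission order is an arbitrary choice in this sketch; a song provider could just as easily break ties by ear.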

So why would this be useful? I think for two reasons. Firstly, in a lot of cases I imagine the song provider isn't a mixer (they're songwriters/musicians?), so having some objective criteria would help them identify particular mix characteristics they might not be aware of. And if they're thinking in terms of specific criteria, they might only need to listen to each mix once.

Secondly, it would really help those of us who don't make the second round. Say a mix gets a 2/10 for tonal balance and one of the top 15 gets 9/10. The person who mixed the 2/10 mix could listen to the 9/10 mix and identify the tonal differences. This would be a huge help!

Anyway, that's my 2c! I'm interested in hearing what others think about this idea.

Cheers!


Mix Challenge - General Gossip Thread

- Mister Fox

- Site Admin

- Posts: 3564

- Joined: Fri Mar 31, 2017 16:15 CEST

- Location: Berlin, Germany

Mix Challenge - General Gossip Thread

cpsmusic wrote (Fri Mar 21, 2025 02:16 CET):

> I have an idea that I'd like to run past those running the Mix Challenge. At present it seems that only the top 15 mixes get feedback. This is understandable due to time considerations however it means that those not selected (the vast majority) don't get feedback, and yet they're the ones that probably need it most.

Please do keep the following in mind.

The Mix Challenge audio community is already special in providing feedback in the first place, and in running a second round on top of that. Most "challenges" or competitions out there, with far stricter rules and thousands of entrants, do not provide any feedback at all. You only find out whether you made it or not, and mostly only a Top 5 is named.

Also - I can only ask so much of each Song Provider. There is a lot of communication going on behind the scenes to keep the game running and explaining things with every step along the way. I am fairly positive that other song providers like @BenjiRage, @JeroenZuiderwijk, @Jorgeelalto, @Mellow Browne and currently @Edling can attest to that.

cpsmusic wrote (Fri Mar 21, 2025 02:16 CET):

> So, I was thinking that a more structured mix evaluation process could help in two ways - firstly, all entrants would get some feedback, and secondly, the person evaluating the mix would have a clear method that they could use which might even make the process quicker.
>
> <example with numeric weighting>
>
> At the end of the listening process, the top scoring mixes would go through to the second round.

Some Song Providers already did that themselves, as in, their own method of weighting submitted entries.

Introducing this as a de facto / strict game mechanic would mean more work on my end: preparing and explaining things in minute detail (in the rule book, which is already heavily skimmed, even the TL;DR version!), sometimes multiple times over, etc.

I try to keep things as simple as possible within the overall constraints of running the game. A numeric weighting that would also "declare the top participants" wouldn't really help, IMHO.

cpsmusic wrote (Fri Mar 21, 2025 02:16 CET):

> Secondly, it would really help those of us who don't make the second round. Say a mix gets a 2/10 for tonal balance and one of the top 15 gets 9/10. The person who mixed the 2/10 mix could listen to the 9/10 mix and identify the tonal differences. This would be a huge help!

Isn't that already happening? Participants already dig deeper after the Mix Round 2 participants are announced.

In fact, you started a conversation about one of the Top 15 entries in here, and asked whether or not this was within the given game parameters.

Since our Song Pool is pretty much dry (no really, there is currently nothing after April!)...

Are you willing to step up to the plate and put your concept to the test?

If the answer is "yes", then please reach out with a demo mix, ideally with multitracks already prepared, and we can sort things out in the coming days/weeks.

Re: Mix Challenge - General Gossip Thread

Mister Fox wrote (Fri Mar 21, 2025 03:44 CET):

> Please do keep the following in mind.
>
> The Mix Challenge audio community is already special with providing feedback in the first place, and running a second round on top of that. Most "challenges" or competitions out there with way more strict rules and thousands on entrants do not provide any feedback at all. You will only get to know if you made it, or not. Mostly only a Top 5.

I've always thought that Mix Challenge was different from the other "challenges" because its focus is more on education, whereas the other sites you mention are primarily competitions (and usually promotions for microphones, etc.).


> Introducing this as a defacto / strict game mechanic would mean more interaction from my end, also preparing and explaining things in minute detail (rule book, which is already heavily skimmed -- even the TL;DR version!), sometimes multiple times over, etc.
>
> I try to keep things as simple as possible within the overall confinements of the game handling. A numeric weighting which would also "declare the top participants" wouldn't really help IMHO.

I actually think it might mean less interaction, because you would only have to set it up once. All it would take is a write-up of the criteria. And regarding the numeric weighting, I assumed that participants would get the full score breakdown, not just the total.

> Isn't that already happening? Participants already dig deeper after the Mix Round 2 participants are announced.

Not really, because it's guesswork on the participant's part as to why their mix didn't make Round 2.

> In fact, you started a conversation about one of the Top 15 entries in here, and asked whether or not this was within the given game parameters.

I think that's a different issue altogether. I asked because I work in a similar way but thought it was outside the rules. I actually looked up Master Plan because I hadn't heard of it; the Sound On Sound review describes it as "An impressive‑sounding limiter‑centred mastering chain". I was just interested in how such a processor was viewed with regard to the rules.

> Since our Song Pool is pretty much dry (no really, there is currently nothing after April!)...
>
> Are you willing to step up to the plate and put your concept to the test?

Sorry, but even though I have a music background, I'm currently trying to improve my mixing skills and would like to focus on that for a while. Production is a whole other ball game.

> If the answer is "yes", then please reach out with a demo mix, ideally also already have multi-tracks prepared, then we can sort things out in the coming days/weeks.

Re: Mix Challenge - General Gossip Thread

Sorry, I stuffed up the quoting in the previous message; my replies are in italics, if that helps.

Cheers!


- BenjiRage

- Wild Card x1

- Posts: 104

- Joined: Thu Apr 13, 2023 03:34 CEST

- Location: Harrogate, UK


Re: Mix Challenge - General Gossip Thread

Mister Fox wrote (Fri Mar 21, 2025 03:44 CET):

> Also - I can only ask so much of each Song Provider. There is a lot of communication going on behind the scenes to keep the game running and explaining things with every step along the way. I am fairly positive that other song providers like @BenjiRage, @JeroenZuiderwijk, @Jorgeelalto, @Mellow Browne and currently @Edling can attest to that.

Absolutely, lots of admin and back-and-forth going on behind the scenes!

cpsmusic wrote (Fri Mar 21, 2025 02:16 CET):

> So, I was thinking that a more structured mix evaluation process could help in two ways - firstly, all entrants would get some feedback, and secondly, the person evaluating the mix would have a clear method that they could use which might even make the process quicker.
>
> I was thinking of something like the following. Have a set of criteria that the song provider uses to evaluate the mixes and rate these criteria for each mix. As an example...

Regarding this, I've observed that in some of the challenges the song providers are very focussed on technical aspects, while in others they are more subjectively centred on how the mixers have understood the material, so I think the ability to be fluid and unrestricted in providing feedback is quite key. While fixed criteria might make feedback a little quicker to provide, I think they would also greatly limit its scope, to the detriment of both the provider and the mixer.

Re: Mix Challenge - General Gossip Thread

> Regarding this, I've observed in some of the challenges the song providers are very focussed on technical aspects, but in others they are more subjectively centred on how the mixers have understood the material; so the ability to be fluid and unrestricted in providing feedback I think is quite key. While fixed criteria might make it a little quicker to provide, I think it would also greatly limit the scope of the feedback to the detriment of both the provider and the mixer.

Agreed, but that's why I suggested having both technical and aesthetic criteria. Part of the problem is that without feedback, it's not even clear whether the song provider rejected the mix on technical or aesthetic grounds.

Anyway, the idea of fixed criteria was just a thought, but if no one's interested it can be binned.

Cheers!

Re: Mix Challenge - General Gossip Thread

Personally, I think a numeric scoring system risks doing more harm than good.

First off, most song providers are not professional mix engineers and do not score a mix on craftsmanship; they score it on how much they like it. The scoring is therefore very subjective: you can't say that a tonal balance of 8 makes a mix technically better than a tonal balance of 3, only that the song provider liked the tonal balance of the first mix better.

Secondly, the experience level of participants varies greatly. Some participants have just started mixing, while others have lots of experience. In my opinion, a linear scoring system would only risk boosting the confidence of those who do not need it and discouraging newcomers who need some encouragement.

To me, feedback is unique to each submission. It needs to be pitched at the participant's experience level, and all feedback should include positives (what was good in the mix, regardless of experience level) as well as things that can be improved in the future. A first-timer getting 1-2/10 in every category will most likely not come back for a second challenge.


Old-school mixer who started out in '95 on tape machines and analog desks. Today mostly in-the-box. Big UAD fanboy.

Re: Mix Challenge - General Gossip Thread

> you can't say that a tonal balance of 8 makes the mix better from a technical point of view than a tonal balance of 3, only that the song provider like the tonal balance of the first mix better.

I didn't mean that an "8" makes something better, just that the criterion was closer to what the song provider had in mind. That way the mixer at least gets some idea of what the song provider did or didn't like about the mix.

> feedback is unique to each submission, that feedback needs to be based on the experience level of the participant, and all feedback needs to contain positives with what was good in the mix, regardless of experience level, as well as things that can be improved on in the future. A first comer getting 1-2/10 in every score will most likely not come back for a second challenge.

The problem I see is that in most cases only the top 15 get feedback and everyone else gets none. Maybe Mr Fox could let us know how many of, say, the last 10 challenges have had feedback for everyone; it seems to be happening less and less. And if Mix Challenge grows to, say, 150 or 200+ participants, there's no way everyone will get feedback.

> A first comer getting 1-2/10

And regarding that: maybe I'm a bit old-school, but when I had piano lessons I wanted the teacher to point out what I was doing wrong so that I could think about ways of fixing it; that's the path to improvement. If I did something well, that was great, but it didn't help me improve.

- Mister Fox

- Site Admin

- Posts: 3564

- Joined: Fri Mar 31, 2017 16:15 CEST

- Location: Berlin, Germany

Mix Challenge - General Gossip Thread

I'll post one more follow-up to this question / suggestion, and then I will drop the topic.


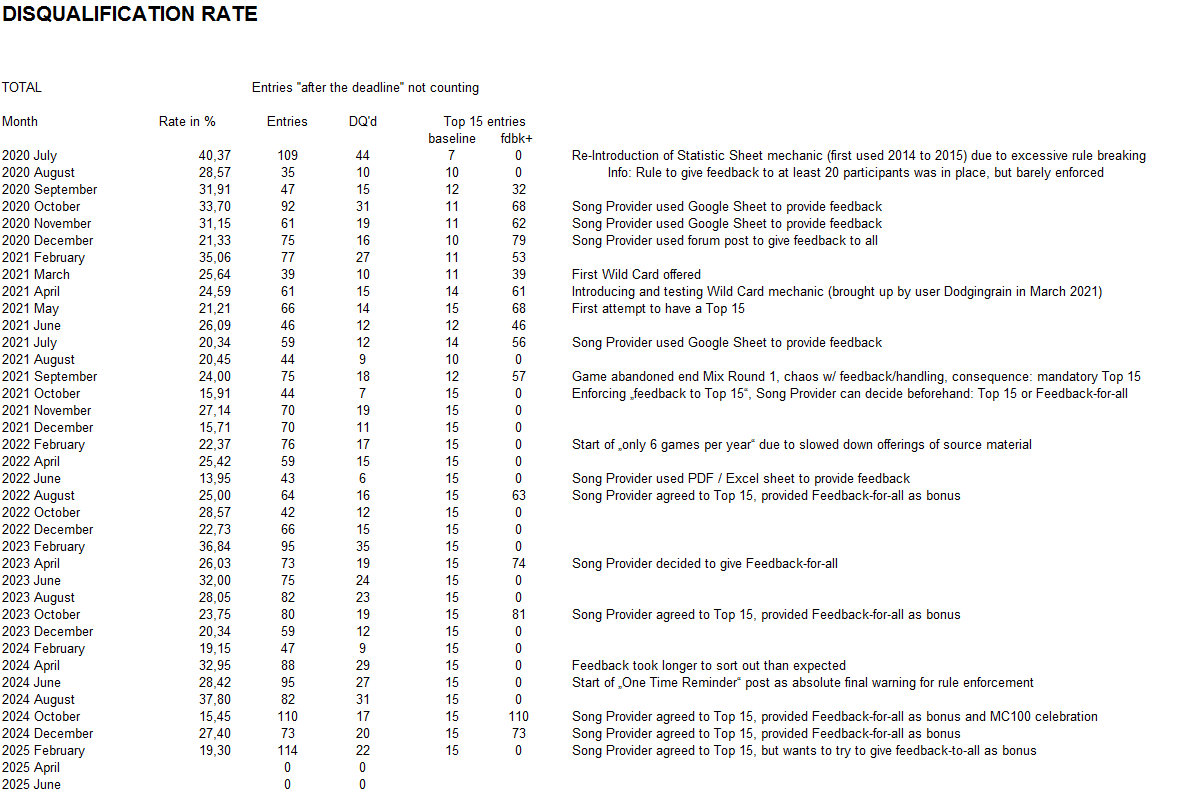

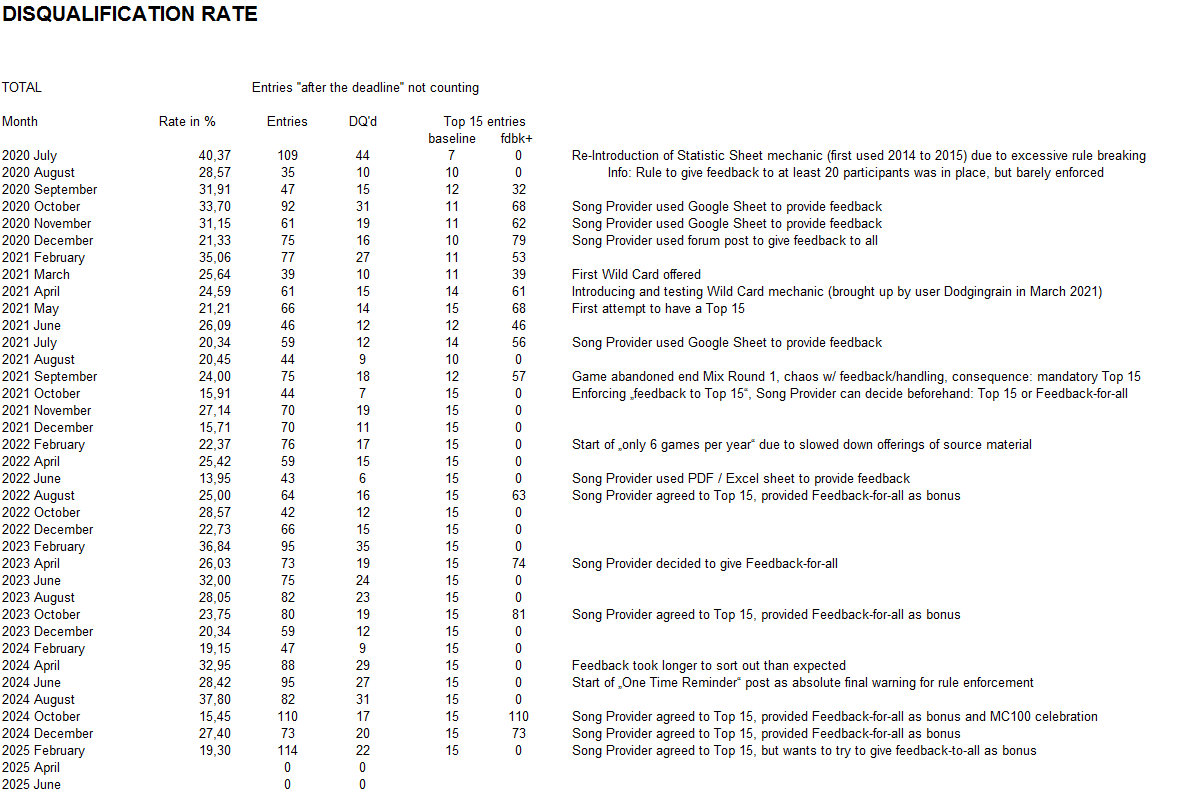


There seems to be some misconception about how the Mix Challenge is presented and run. There's a lot going on behind the scenes that most participants do not see: things that ensure the games run smoothly while also being fair to all participants. Things that eat hours of sometimes very limited spare time.

This is a community that is free for everyone to access. I do not make a dime from it, yet I seem to invest the most (namely, time). So if I say "no" to a certain topic, please accept that.

The Mix Challenge has always been about "sharing knowledge", even before becoming fully independent in April 2017.

At heart, it is still an educational experience simulating a "client to business scenario" (a mass mix engineer shootout) without the risk of severe repercussions for "making mistakes" (which can result in you losing jobs). It is also about learning how to document your edits (a sadly forgotten skill) and sharing knowledge / tricks (which can be picked up from the documentation). Because of both the learning aspect that "making mistakes will have repercussions" and the "bonus licenses" on offer, this game has to have rules like a regular competition.

What sets the Mix Challenge apart from what is out there is that you get feedback in the first place, and you are encouraged both to share knowledge and to "learn by doing".

cpsmusic wrote (Fri Mar 21, 2025 05:39 CET):

> I actually think it might mean less interaction because you would only have to set it up once. All it would entail would be a write-up of the criteria. And regarding the numeric weighting, I thought that the participants would get the full score breakdown not just the total.

You have no idea how much extra work this would mean.

This includes, but is not limited to: creating the templates (something that works cross-platform and can also be easily presented to everyone), writing up a set of rules on top of the already extensive ones, explaining the rules with each game (for both the Song Provider and the participants), keeping the Song Provider engaged, especially with a system that person might not be used to, and answering technical questions on the forum, Discord, email, etc.

This wouldn't simplify things, and it wouldn't result in more feedback; it would only result in exponentially more work, which I do not have the time or patience for.

Not to mention that a lot of users already consider the games "too complicated" and never participate in the first place because of that.

Mister Fox wrote (Fri Mar 21, 2025 03:44 CET):

> Isn't that already happening? Participants already dig deeper after the Mix Round 2 participants are announced.

I'm sorry, but this is what the Statistic Sheet is for.

I make it clear with every game that only the Top 15 participants will get feedback; everything else is a bonus. And if I know the Song Provider's decision on this beforehand, I will announce it (I started doing this with MC081 in October 2021). The Rule Book also states that the Song Provider is under no obligation to do more than that.

I apologize for any inconvenience and disappointment this may cause.

That doesn't mean the community can't chime in and provide additional commentary. In fact, while checking the last 40 games, I've seen your name pop up multiple times offering follow-up feedback (as have other community regulars). Again, this is what sets the Mix Challenge apart from what is out there: the interaction. And I can't thank our community regulars enough for continuing to do that.

I have always been open about the statistics I create (of which there are plenty: participation numbers, Statistic Sheets, disqualification rate, etc.). However, this was never really on my radar until now.

I invested a couple of hours (think 3-4) reading through old threads back to at least MC067 / July 2020, and now I can give you detailed results for the last 36 games, or a bit more than 4.25 years of additional data.

I hope this satisfies your curiosity.

cpsmusic wrote (Fri Mar 21, 2025 13:40 CET):

> The problem I see is that in most cases only the top 15 get feedback and everyone else gets none. Maybe Mr Fox could let us know how many of say the last 10 challenges have had feedback for everyone - it seems to be happening less and less. And if Mix Challenge grows to, say, 150 or 200+ participants there's no way everyone will get feedback.

This is where there seems to be a misconception that "giving feedback to all was always the case". Unfortunately, I have to burst that bubble.

Until about Mix Challenge 030, there was no definite guideline regarding "giving feedback". There was an attempt to at least provide it to the participants going into Mix Round 2; however, that was all over the place. Starting with going independent (April 2017 / MC031), there was a definite rule in place to give feedback to at least 20 participants, and to have at least 10 to 15 participants go into Mix Round 2. Enforcing this was not easy. There were constant debates about lack of feedback (sometimes there was feedback for nearly all participants, sometimes for only half, sometimes only the bare minimum), how to "interpret" rules (I put that in quotation marks on purpose), etc.

I drew a clear line in the sand with MC067 / July 2020 and reintroduced the "Statistic Sheet" to the public after excessive rule breaking and never-ending debates. I also set new ground rules, adjusted others, and tested and later implemented additional game mechanics (e.g. the Wild Card mechanic, Mix Round 3, etc.), while still being very, very accommodating / lenient towards participants, often to this very day.

The Mix Challenge was never set up to provide feedback to all participants, just more than what you might see elsewhere (which was a very, very low bar to begin with).

The Songwriting Competition is a different beast - but even here, there are clear rules for a "bare minimum".

What a shame.

We are really out of new source material for future Mix(ing) Challenges, and I can only do so much in terms of reaching out.

Re: Mix Challenge - General Gossip Thread

> This is where there seem to be some misconceptions that "giving feedback-for-all was always the case". I unfortunately have to burst that bubble.

That's not what I said; what I said was that it would be better if everyone were given feedback.

> I'm sorry - but this is what the Statistic Sheet is for.

The Statistic Sheet is only feedback insofar as it shows whether you were DQ-ed or not. I'm referring to those who don't make Round 2 but also aren't DQ-ed.

> I've seen your name pop up multiple times offering follow-up feedback (so did other community regulars). Again, this is what sets the Mix Challenge apart to what out there - the interaction. And I can't thank our community regulars enough for continuing to do that.

Yes, and I can tell you that for the most part the response to "I'll review yours if you review mine" has been very disappointing. No one seems interested, and when I asked why, it was suggested that what people really want is the song provider's thoughts, not mine.

Anyway, let's draw a line under this. It was just an idea, and if others think there's no merit in it, c'est la vie!

Cheers!